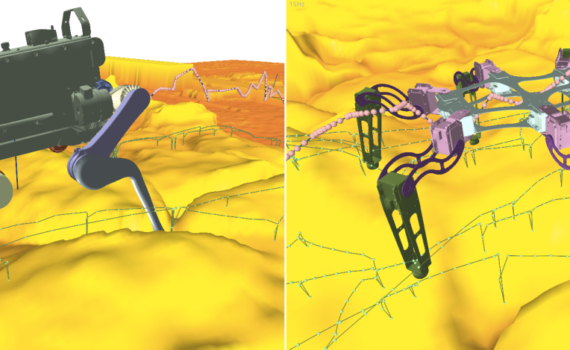

Mastering locomotion over rough terrain is a tough challenge for robots because of its dynamic, unpredictable nature and frequent physical contact. Traditionally, robots rely on carefully planned foot placements to maintain grip and stability. Recent advances in quadruped robot feet offer diverse shapes and high grip on various terrains. However, control […]

Media

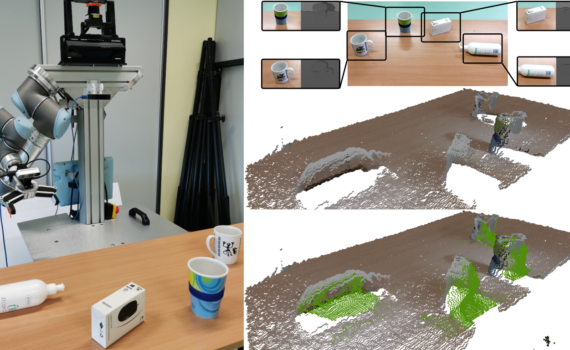

Robots have difficulty perceiving new scenes, particularly when objects are observed from a single viewpoint, which yields incomplete information about their shape. Partial object models degrade the performance of grasping methods. To address this, robots can scan the scene from various viewpoints or employ methods that directly fill in […]

We are happy to announce that our paper “On the Importance of the RGB-D Sensor Model in the CNN-based Robotic Perception” by Mikołaj Zieliński and Dominik Belter won the Best Paper Award in the Robotics and Autonomous Systems session at PP-RAI 2023.

In this research, we investigate state estimation of articulated objects with rotational joints during robotic interaction. We estimate the position of the joint axis and the current rotation of the object from a pair of RGB-D images captured by a depth camera mounted on the robot. However, the camera […]

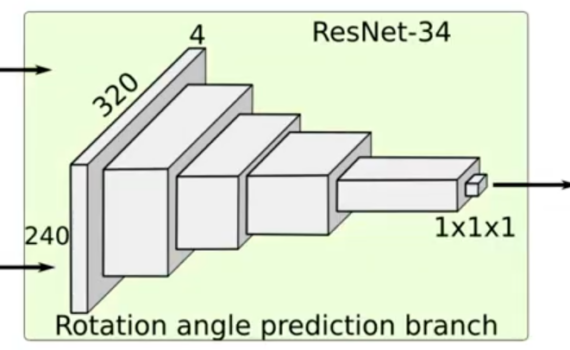

Robots have limited perception capabilities when observing a new scene. When objects in the scene are observed from a single viewpoint, only partial information about their shape is captured. Incomplete object models degrade the performance of grasping methods. In this case, the robot should either scan the scene from other viewpoints to collect more information about the objects or use methods that fill in the unknown regions of the scene. CNN-based methods for object reconstruction from a single view operate on 3D structures such as point clouds or 3D grids. In this research, we revisit the problem of scene reconstruction and show that it can be formulated in 2D image space. We propose a new representation of the scene reconstruction problem for a robot equipped with an RGB-D camera. We then present a method that generates a depth image of the objects as seen from a camera pose on the opposite side of the scene. We show how to train a neural network to produce accurate depth images of the objects and to reconstruct a 3D model of a scene observed from a single viewpoint. Moreover, we show that the resulting model can be used to improve the success rate of a grasping method.
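Turning depth images back into a 3D model, as described above, ultimately rests on back-projecting each pixel through the camera model. Below is a minimal sketch, assuming a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` and depth in meters. The depth image "seen" from the opposite side is simply taken as given here (in the paper it comes from the trained network), and the rear-to-front camera transform is an illustrative assumption. This is not the authors' code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_depth(depth: Sequence[Sequence[float]], fx: float, fy: float,
                      cx: float, cy: float) -> List[Point]:
    """Convert a depth image (meters, indexed depth[v][u]) into camera-frame points.

    Uses the pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: pixel row
        for u, z in enumerate(row):      # u: pixel column
            if z <= 0.0:
                continue                 # no depth measurement at this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def transform_points(points: Sequence[Point],
                     rotation: Sequence[Sequence[float]],
                     translation: Sequence[float]) -> List[Point]:
    """Map points into another frame with p' = R p + t (R is 3x3, t is a 3-vector)."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
              for i in range(3))
        for p in points
    ]


if __name__ == "__main__":
    # Hypothetical setup: the "rear" camera sits 1 m along the front camera's
    # optical axis and faces back toward it (a 180-degree turn about the y axis).
    R_rear_to_front = [[-1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]
    t_rear_to_front = [0.0, 0.0, 1.0]
    front = backproject_depth([[0.5, 0.5], [0.5, 0.0]], fx=500.0, fy=500.0, cx=0.5, cy=0.5)
    rear = backproject_depth([[0.4, 0.4], [0.4, 0.4]], fx=500.0, fy=500.0, cx=0.5, cy=0.5)
    merged = front + transform_points(rear, R_rear_to_front, t_rear_to_front)
    print(len(merged))  # prints 7: 3 valid front pixels + 4 rear pixels
```

Expressing both views in the front camera's frame lets the observed front surface and the predicted back surface be merged into a single point cloud, which is the kind of completed model a grasp planner can then consume.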

In this paper, we address the problem of full-body path planning for walking robots. The state of a walking robot is defined in a multi-dimensional space, and path planning requires defining the paths of both the feet and the robot's body. Moreover, the planner must check multiple constraints, such as static stability, self-collisions, collisions with the terrain, and the legs' workspace. As a result, checking the feasibility of a candidate path is time-consuming and affects the performance of the planning method. We evaluate the applicability of sampling-based planners to path planning for walking robots and identify the strengths and weaknesses of existing planners. Finally, we propose a new planning method that improves the performance of path planning for legged robots.
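To make the cost structure of a sampling-based planner concrete, here is a minimal sketch of a generic RRT in which every candidate edge must pass a feasibility callback. Assumptions: the state is reduced to a 2D body position, and `make_disc_checker` is a hypothetical stand-in for the static-stability, self-collision, terrain-collision, and leg-workspace checks. This is a textbook RRT, not the planner proposed in the paper.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Tuple

State = Tuple[float, float]
FeasibilityCheck = Callable[[State, State], bool]


def rrt(start: State, goal: State, is_feasible: FeasibilityCheck,
        bounds: Tuple[float, float, float, float], step: float = 0.5,
        goal_tol: float = 0.5, goal_bias: float = 0.1,
        max_iters: int = 5000, seed: int = 0) -> Optional[List[State]]:
    """Basic RRT: grow a tree from `start` until a node lands within `goal_tol` of `goal`.

    Returns the path as a list of states from start to the final node, or None.
    """
    rng = random.Random(seed)
    xmin, xmax, ymin, ymax = bounds
    nodes: List[State] = [start]
    parent: List[int] = [-1]
    for _ in range(max_iters):
        # Sample the goal occasionally, otherwise a uniform random state.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        # Nearest node by linear scan (a k-d tree would be used at scale).
        i_near = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        s = min(1.0, step / d)
        new = (near[0] + s * (sample[0] - near[0]), near[1] + s * (sample[1] - near[1]))
        # Every candidate edge must pass the (expensive) feasibility check.
        if not is_feasible(near, new):
            continue
        nodes.append(new)
        parent.append(i_near)
        if math.dist(new, goal) <= goal_tol:
            # Walk parent links back to the root to recover the path.
            path, k = [], len(nodes) - 1
            while k != -1:
                path.append(nodes[k])
                k = parent[k]
            return path[::-1]
    return None


def make_disc_checker(center: State, radius: float,
                      samples: int = 10) -> Tuple[FeasibilityCheck, Dict[str, int]]:
    """Hypothetical stand-in for the stability/collision/workspace checks.

    Rejects an edge if any of `samples + 1` evenly spaced points on it lies inside
    a circular obstacle; also counts how many times it is called.
    """
    calls = {"n": 0}

    def is_feasible(a: State, b: State) -> bool:
        calls["n"] += 1
        for k in range(samples + 1):
            s = k / samples
            p = (a[0] + s * (b[0] - a[0]), a[1] + s * (b[1] - a[1]))
            if math.dist(p, center) < radius:
                return False
        return True

    return is_feasible, calls


if __name__ == "__main__":
    check, calls = make_disc_checker(center=(5.0, 5.0), radius=1.5)
    path = rrt((0.0, 0.0), (10.0, 10.0), check, bounds=(0.0, 10.0, 0.0, 10.0))
    print(path is not None, len(path or []), calls["n"])
```

The planner logic itself is cheap; the call counter shows how many edge checks it takes to find a path. For a real walking robot, each of those calls would be a full stability, collision, and workspace evaluation over a much higher-dimensional state, which is why the feasibility check dominates planning time.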