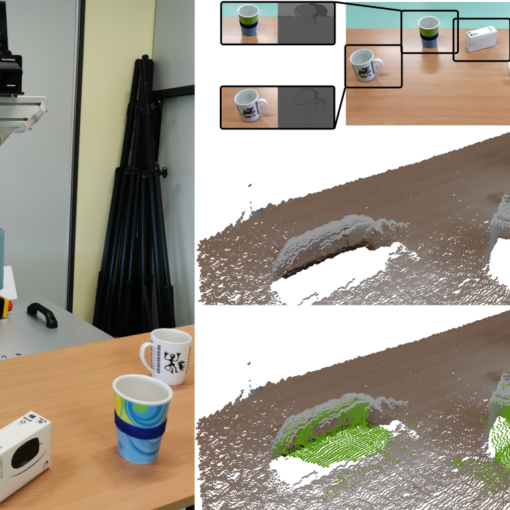

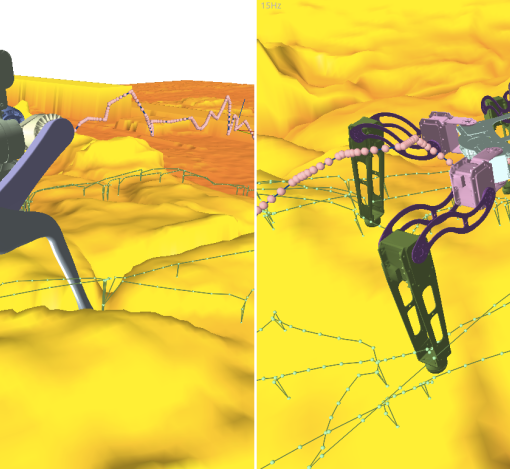

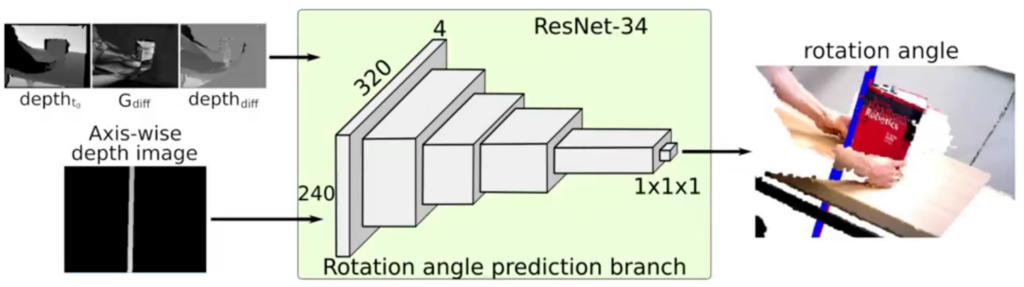

In this research, we investigate state estimation for rotational articulated objects during robotic interaction. We estimate the position of the joint axis and the current rotation of the object from a pair of RGB-D images captured by the depth camera mounted on the robot. However, this camera has a limited view because the robot's arm occludes the scene. Moreover, some object configurations are difficult to capture with typical RGB-D sensors, so model-based methods fail in these cases. To deal with this problem, we propose a CNN-based architecture that gradually estimates the parameters and the state of the joint. To meet real-time requirements on the real robot, inference runs on 2D images and does not operate directly on the 3D model of the object. The proposed method is trained and verified on the RBO dataset, which contains RGB-D sequences of manipulated articulated objects.
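To make the estimated quantities concrete: for a rotational joint, the axis (a point on it and its direction) together with the rotation angle determines where any point of the moving part ends up. The sketch below illustrates this with Rodrigues' rotation formula in plain Python. It is only an illustration of the joint-state parameterization, not the network or the code from the publications; the function name `rotate_about_axis` and its arguments are hypothetical.

```python
import math

def rotate_about_axis(point, axis_point, axis_dir, angle):
    """Rotate a 3D point by `angle` radians about the line that passes
    through `axis_point` with direction `axis_dir` (Rodrigues' formula).
    All names here are illustrative assumptions, not the authors' API."""
    # Normalize the axis direction to a unit vector k.
    norm = math.sqrt(sum(c * c for c in axis_dir))
    k = [c / norm for c in axis_dir]
    # Express the point relative to a point on the axis.
    v = [p - a for p, a in zip(point, axis_point)]
    cos_t, sin_t = math.cos(angle), math.sin(angle)
    # Cross product k x v and dot product k . v.
    cross = [
        k[1] * v[2] - k[2] * v[1],
        k[2] * v[0] - k[0] * v[2],
        k[0] * v[1] - k[1] * v[0],
    ]
    dot = sum(ki * vi for ki, vi in zip(k, v))
    # v_rot = v cos(t) + (k x v) sin(t) + k (k . v) (1 - cos(t))
    rotated = [
        v[i] * cos_t + cross[i] * sin_t + k[i] * dot * (1.0 - cos_t)
        for i in range(3)
    ]
    # Shift back to the original coordinate frame.
    return [r + a for r, a in zip(rotated, axis_point)]

# Example: a door edge 1 m from a vertical hinge located at (1, 1, 0),
# opened by 90 degrees.
edge = rotate_about_axis([2.0, 1.0, 0.0], [1.0, 1.0, 0.0], [0.0, 0.0, 1.0], math.pi / 2)
```

With a vertical hinge through (1, 1, 0), the point (2, 1, 0) moves to approximately (1, 2, 0) after a quarter turn, which is the kind of geometric relationship the estimated axis and angle encode.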

[1] Kamil Młodzikowski, Dominik Belter, CNN-based Joint State Estimation During Robotic Interaction with Articulated Objects, Proceedings of the 17th International Conference on Control, Automation, Robotics and Vision (ICARCV), December 11-13, 2022, Singapore, pp. 78-83, 2022

[2] Kamil Młodzikowski, Dominik Belter, CNNs for State Estimation of Articulated Objects, Proceedings of the 3rd Polish Conference on Artificial Intelligence, April 25-27, 2022, Gdynia, Poland, pp. 112-115, 2022